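## Preface

I had an idle X99 dual-socket server (2x E5-2696 v4, 88 threads, 128 GB RAM) that had been gathering dust. I wanted an OpenStack environment to practice on, but not an all-in-one toy setup like DevStack. That kind of setup is too artificial and has little in common with a real production environment.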

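So I decided to do it the hard way. The setup uses 5 VMs, with each component deployed separately, to simulate a real multi-node topology.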

It took a full day and I hit plenty of pitfalls along the way. This post records the complete process.

## Hardware and planning

### Host: x99

| Item | Spec |
|---|---|
| CPU | 2x Intel Xeon E5-2696 v4 (44 cores, 88 threads) |
| Memory | 128 GB DDR4 ECC |
| Storage | ~900 GB ZFS |
| OS | Ubuntu 24.04.2 LTS |
| IP | 192.168.71.65 |

88 threads plus 128 GB of RAM is more than enough for 5 VMs.

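## Architecture

The host runs only KVM and Docker and stays as clean as possible. The infrastructure services (database, message queue and cache) run in Docker on the host. The OpenStack components are spread across 5 VMs: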

```text
Physical network: 192.168.71.0/24 (gateway .1)
Floating IP pool: 192.168.71.200-250

x99 host (.65)
├── Docker: MariaDB + RabbitMQ + Memcached
├── KVM/libvirt + br0 bridge
│
├── os-ctrl  (.71): Keystone, Glance, Placement, Horizon
├── os-net   (.72): Neutron (all agents)
├── os-nova  (.73): Nova control plane (API/scheduler/VNC)
├── os-comp1 (.74): Nova compute node
└── os-comp2 (.75): Nova compute node
```

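### VM resource allocation

| VM | Role | vCPU | Memory | Disk | IP |
|---|---|---|---|---|---|
| os-ctrl | Keystone + Glance + Placement + Horizon | 4 | 8G | 50G | .71 |
| os-net | All Neutron agents | 4 | 8G | 30G | .72 |
| os-nova | Nova API/scheduler/conductor/VNC | 4 | 8G | 30G | .73 |
| os-comp1 | Nova compute | 8 | 16G | 80G | .74 |
| os-comp2 | Nova compute | 8 | 16G | 80G | .75 |
| Total | | 28 | 56G | 270G | |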

That still leaves 60 threads and 72 GB of RAM on the host, which is plenty of headroom.
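The totals row and the headroom figure are plain sums. The Python sketch below reproduces them as a quick sanity check. The specs are copied from the table above. The sketch treats one vCPU as one host thread and ignores what the host itself uses (ZFS, the Docker containers).

```python
# Per-VM specs copied from the allocation table: (vCPU, RAM in GB, disk in GB)
VMS = {
    "os-ctrl": (4, 8, 50),
    "os-net": (4, 8, 30),
    "os-nova": (4, 8, 30),
    "os-comp1": (8, 16, 80),
    "os-comp2": (8, 16, 80),
}

HOST_THREADS = 88   # 2x E5-2696 v4
HOST_RAM_GB = 128


def totals(vms):
    """Return (total vCPU, total RAM GB, total disk GB) across all VMs."""
    cpus = sum(cpu for cpu, _, _ in vms.values())
    ram = sum(mem for _, mem, _ in vms.values())
    disk = sum(d for _, _, d in vms.values())
    return cpus, ram, disk


if __name__ == "__main__":
    cpus, ram, disk = totals(VMS)
    print(f"allocated: {cpus} vCPU, {ram} GB RAM, {disk} GB disk")
    print(f"headroom:  {HOST_THREADS - cpus} threads, {HOST_RAM_GB - ram} GB RAM")
```

It prints 28 vCPU, 56 GB RAM and 270 GB disk allocated, with 60 threads and 72 GB RAM left over.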

## Step 1: Preparing the host

### Installing Docker

Nothing special here. It's the standard procedure:

```bash
sudo apt-get update && sudo apt-get install -y ca-certificates curl
sudo install -m 0755 -d /etc/apt/keyrings
curl -fsSL https://download.docker.com/linux/ubuntu/gpg | sudo gpg --dearmor -o /etc/apt/keyrings/docker.gpg
echo "deb [arch=$(dpkg --print-architecture) signed-by=/etc/apt/keyrings/docker.gpg] https://download.docker.com/linux/ubuntu $(. /etc/os-release && echo $VERSION_CODENAME) stable" | sudo tee /etc/apt/sources.list.d/docker.list
sudo apt-get update && sudo apt-get install -y docker-ce docker-ce-cli containerd.io docker-buildx-plugin docker-compose-plugin
```

### Installing KVM/libvirt

```bash
sudo apt-get install -y qemu-kvm libvirt-daemon-system libvirt-clients virtinst bridge-utils cpu-checker
sudo systemctl enable --now libvirtd
sudo usermod -aG libvirt ubuntu
```

### Enabling nested virtualization

The X99 is an Intel platform, so nested support has to be enabled in kvm_intel:

```bash
echo 'options kvm_intel nested=1' | sudo tee /etc/modprobe.d/kvm-nested.conf
sudo modprobe -r kvm_intel && sudo modprobe kvm_intel nested=1
cat /sys/module/kvm_intel/parameters/nested   # should print Y (or 1)
```

Nested virtualization turns out to have a catch of its own. More on that later.

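### Configuring the br0 bridge

This step is **critical**, and it's also the easiest place to lock yourself out. The physical NIC enp6s0 goes into the bridge, and the host switches to a static IP.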

Write /etc/netplan/01-bridge.yaml:

```yaml
network:
  version: 2
  renderer: NetworkManager
  ethernets:
    enp6s0:
      dhcp4: false
  bridges:
    br0:
      interfaces: [enp6s0]
      addresses: [192.168.71.65/24]
      routes:
        - to: default
          via: 192.168.71.1
      nameservers:
        addresses: [8.8.8.8, 1.1.1.1]
      dhcp4: false
```

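**Always run netplan try first, never go straight to netplan apply!** netplan try rolls the change back automatically after 120 seconds unless you confirm it. If the config is wrong, you won't lose access to the machine: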

```bash
sudo netplan try
sudo netplan apply
```

While you're at it, disable libvirt's default NAT network. This setup uses its own bridge instead:

```bash
sudo virsh net-destroy default
sudo virsh net-autostart --disable default
```

### Installing Vagrant + vagrant-libvirt

Why Vagrant? Creating 5 VMs by hand with virt-install is tedious, and so is writing the cloud-init configs. With Vagrant, a single file handles everything:

```bash
wget -O- https://apt.releases.hashicorp.com/gpg | sudo gpg --dearmor -o /usr/share/keyrings/hashicorp-archive-keyring.gpg
echo 'deb [signed-by=/usr/share/keyrings/hashicorp-archive-keyring.gpg] https://apt.releases.hashicorp.com noble main' | sudo tee /etc/apt/sources.list.d/hashicorp.list
sudo apt-get update && sudo apt-get install -y vagrant
sudo apt-get install -y libvirt-dev
vagrant plugin install vagrant-libvirt
```

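## Step 2: Infrastructure containers

OpenStack depends on three basic services: MariaDB, RabbitMQ and Memcached. Rather than installing them on every VM, run them in Docker on the host and point every VM at the host.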

Create /opt/openstack-infra/docker-compose.yml:

```yaml
services:
  mariadb:
    image: mariadb:11
    restart: unless-stopped
    environment:
      MYSQL_ROOT_PASSWORD: openstack
    ports:
      - "192.168.71.65:3306:3306"
    volumes:
      - mariadb_data:/var/lib/mysql
      - ./init-db.sql:/docker-entrypoint-initdb.d/init-db.sql:ro
    command: >
      --character-set-server=utf8mb4
      --collation-server=utf8mb4_general_ci
      --max-connections=4096

  rabbitmq:
    image: rabbitmq:3-management
    restart: unless-stopped
    environment:
      RABBITMQ_DEFAULT_USER: openstack
      RABBITMQ_DEFAULT_PASS: openstack
    ports:
      - "192.168.71.65:5672:5672"
      - "192.168.71.65:15672:15672"
    volumes:
      - rabbitmq_data:/var/lib/rabbitmq

  memcached:
    image: memcached:1.6
    restart: unless-stopped
    ports:
      - "192.168.71.65:11211:11211"
    command: memcached -m 512

volumes:
  mariadb_data:
  rabbitmq_data:
```

Note that all ports are bound to 192.168.71.65 rather than 0.0.0.0. Only the VMs on the internal network need to reach these services.
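The services are only published on 192.168.71.65. A quick way to confirm that a VM can actually reach them is a plain TCP connect to each port. The Python sketch below assumes it runs on one of the VMs or on the host, and it uses the ports published in the compose file above. A successful connect only proves the port is open. It does not prove the credentials work.

```python
import socket

# Host IP and ports as published in docker-compose.yml
INFRA_HOST = "192.168.71.65"
INFRA_PORTS = {"mariadb": 3306, "rabbitmq": 5672, "rabbitmq-mgmt": 15672, "memcached": 11211}


def check_ports(host, ports, timeout=2.0):
    """Return {name: True/False} depending on whether a TCP connect succeeds."""
    results = {}
    for name, port in ports.items():
        try:
            with socket.create_connection((host, port), timeout=timeout):
                results[name] = True
        except OSError:  # refused, timed out, unreachable...
            results[name] = False
    return results


if __name__ == "__main__":
    for name, ok in check_ports(INFRA_HOST, INFRA_PORTS).items():
        print(f"{name:15} {'open' if ok else 'CLOSED'}")
```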

The database init script, init-db.sql, creates all the databases and users up front:

```sql
CREATE DATABASE IF NOT EXISTS keystone;
GRANT ALL PRIVILEGES ON keystone.* TO 'keystone'@'%' IDENTIFIED BY 'openstack';
CREATE DATABASE IF NOT EXISTS glance;
GRANT ALL PRIVILEGES ON glance.* TO 'glance'@'%' IDENTIFIED BY 'openstack';
CREATE DATABASE IF NOT EXISTS placement;
GRANT ALL PRIVILEGES ON placement.* TO 'placement'@'%' IDENTIFIED BY 'openstack';
CREATE DATABASE IF NOT EXISTS nova_api;
CREATE DATABASE IF NOT EXISTS nova;
CREATE DATABASE IF NOT EXISTS nova_cell0;
GRANT ALL PRIVILEGES ON nova_api.* TO 'nova'@'%' IDENTIFIED BY 'openstack';
GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'%' IDENTIFIED BY 'openstack';
GRANT ALL PRIVILEGES ON nova_cell0.* TO 'nova'@'%' IDENTIFIED BY 'openstack';
CREATE DATABASE IF NOT EXISTS neutron;
GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'%' IDENTIFIED BY 'openstack';
FLUSH PRIVILEGES;
```
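Every service follows the same pattern: one or more databases, plus one user with ALL PRIVILEGES on them. That means the script can also be generated, which helps once you add services such as Cinder. The Python sketch below emits the same statements in the same order as init-db.sql above. The lab password is a default parameter, and `SERVICES` is just an illustrative data table.

```python
# Service user -> databases it owns, mirroring init-db.sql
SERVICES = {
    "keystone": ["keystone"],
    "glance": ["glance"],
    "placement": ["placement"],
    "nova": ["nova_api", "nova", "nova_cell0"],
    "neutron": ["neutron"],
}


def render_init_sql(services, password="openstack"):
    """Build CREATE DATABASE / GRANT statements, one DB user per service."""
    lines = []
    for user, dbs in services.items():
        for db in dbs:
            lines.append(f"CREATE DATABASE IF NOT EXISTS {db};")
        for db in dbs:
            lines.append(
                f"GRANT ALL PRIVILEGES ON {db}.* TO '{user}'@'%' IDENTIFIED BY '{password}';"
            )
    lines.append("FLUSH PRIVILEGES;")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(render_init_sql(SERVICES), end="")
```

The MariaDB image only runs scripts from /docker-entrypoint-initdb.d when the data volume is empty. After changing the script on an existing setup, either apply the new statements by hand or recreate the mariadb_data volume.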

Start it:

```bash
cd /opt/openstack-infra
sudo docker compose up -d
```

Once MariaDB is up, verify it:

```bash
sudo docker exec openstack-infra-mariadb-1 mariadb -uroot -popenstack -e 'SHOW DATABASES'
```

## Step 3: Creating 5 VMs with Vagrant

Here's the Vagrantfile. It creates all 5 Ubuntu 24.04 VMs in one go:

```ruby
Vagrant.configure("2") do |config|
  config.vm.box = "cloud-image/ubuntu-24.04"

  HOSTS_CONTENT = <<~HOSTS
    127.0.0.1 localhost
    192.168.71.65 x99
    192.168.71.71 os-ctrl
    192.168.71.72 os-net
    192.168.71.73 os-nova
    192.168.71.74 os-comp1
    192.168.71.75 os-comp2
  HOSTS

  HOST_SSH_KEY = File.read(File.expand_path("~/.ssh/id_ed25519.pub")).strip rescue File.read(File.expand_path("~/.ssh/id_rsa.pub")).strip

  VMS = {
    "os-ctrl"  => { ip: "192.168.71.71", cpus: 4, memory: 8192,  disk: "50G" },
    "os-net"   => { ip: "192.168.71.72", cpus: 4, memory: 8192,  disk: "30G" },
    "os-nova"  => { ip: "192.168.71.73", cpus: 4, memory: 8192,  disk: "30G" },
    "os-comp1" => { ip: "192.168.71.74", cpus: 8, memory: 16384, disk: "80G" },
    "os-comp2" => { ip: "192.168.71.75", cpus: 8, memory: 16384, disk: "80G" },
  }

  VMS.each do |name, spec|
    config.vm.define name do |node|
      node.vm.hostname = name
      node.vm.network :public_network,
        dev: "br0", mode: "bridge", type: "bridge",
        ip: spec[:ip], netmask: "255.255.255.0"

      node.vm.provider :libvirt do |lv|
        lv.cpus = spec[:cpus]
        lv.memory = spec[:memory]
        lv.machine_virtual_size = spec[:disk].to_i
        lv.nested = true if name.start_with?("os-comp")
        lv.cpu_mode = "host-passthrough" if name.start_with?("os-comp")
      end

      node.vm.provision "shell", inline: <<~SHELL
        cat > /etc/hosts <<'EOF'
        #{HOSTS_CONTENT}
        EOF
        mkdir -p /home/ubuntu/.ssh
        echo "#{HOST_SSH_KEY}" >> /home/ubuntu/.ssh/authorized_keys
        sort -u -o /home/ubuntu/.ssh/authorized_keys /home/ubuntu/.ssh/authorized_keys
        chown -R ubuntu:ubuntu /home/ubuntu/.ssh
        chmod 700 /home/ubuntu/.ssh && chmod 600 /home/ubuntu/.ssh/authorized_keys
        ip route replace default via 192.168.71.1 dev eth1 || true
        growpart /dev/vda 1 2>/dev/null || true
        resize2fs /dev/vda1 2>/dev/null || xfs_growfs / 2>/dev/null || true
      SHELL
    end
  end
end
```

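A few details:

- The compute nodes get lv.nested = true and cpu_mode = "host-passthrough". The goal is to make KVM usable inside those VMs (nested virtualization).
- Each VM has two NICs. eth0 is Vagrant's management network (NAT). eth1 is the service network, bridged to br0.
- The line ip route replace default via 192.168.71.1 dev eth1 in the provision script makes the default route go through the bridged network instead of NAT.
- growpart + resize2fs grow the root partition and filesystem to the allocated disk size.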

```bash
mkdir -p ~/openstack-vms && cd ~/openstack-vms
# put the Vagrantfile above in this directory, then:
vagrant up
```

Grab a coffee, and after a while all 5 VMs are up. Verify them:

```bash
for ip in 71 72 73 74 75; do
  ssh -o StrictHostKeyChecking=no ubuntu@192.168.71.$ip hostname
done
```

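## Step 4: Keystone (identity service)

Keystone is OpenStack's authentication core. Every other service depends on it, so it has to be installed first. Run this on os-ctrl (.71):

```bash
sudo apt-get update
sudo apt-get install -y keystone python3-openstackclient apache2 libapache2-mod-wsgi-py3 crudini
```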

From here on I use crudini heavily to edit config files. It's far more reliable than sed.
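crudini --set FILE SECTION KEY VALUE does four things: it loads the INI file, creates the section if it's missing, sets the key, and writes the file back. The Python sketch below is a rough equivalent built on the standard configparser. It only makes that behavior concrete. `ini_set` is a hypothetical helper, not part of crudini.

```python
import configparser


def ini_set(path, section, key, value):
    """Rough equivalent of `crudini --set path section key value` (hypothetical helper)."""
    cfg = configparser.ConfigParser(interpolation=None)
    cfg.optionxform = str  # keep key case as written instead of lowercasing it
    cfg.read(path)         # a missing file simply yields an empty config
    # DEFAULT always exists implicitly in configparser; every other section may need creating
    if section != "DEFAULT" and not cfg.has_section(section):
        cfg.add_section(section)
    cfg.set(section, key, value)
    with open(path, "w") as fh:
        cfg.write(fh)


if __name__ == "__main__":
    ini_set("/tmp/keystone.conf", "token", "provider", "fernet")
    print(open("/tmp/keystone.conf").read())
```

The sketch also shows why crudini is the better tool. configparser drops every comment when it writes the file back, and in its default strict mode it refuses files that repeat a key within a section. crudini leaves the rest of the file intact.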

Configure /etc/keystone/keystone.conf:

```bash
sudo crudini --set /etc/keystone/keystone.conf database connection \
  'mysql+pymysql://keystone:openstack@192.168.71.65/keystone'
sudo crudini --set /etc/keystone/keystone.conf token provider fernet
sudo crudini --set /etc/keystone/keystone.conf cache enabled true
sudo crudini --set /etc/keystone/keystone.conf cache backend dogpile.cache.memcached
sudo crudini --set /etc/keystone/keystone.conf cache memcache_servers 192.168.71.65:11211
```

Initialize the database and bootstrap Keystone:

```bash
sudo su -s /bin/bash keystone -c 'keystone-manage db_sync'
sudo keystone-manage fernet_setup --keystone-user keystone --keystone-group keystone
sudo keystone-manage credential_setup --keystone-user keystone --keystone-group keystone
sudo keystone-manage bootstrap --bootstrap-password openstack \
  --bootstrap-admin-url http://192.168.71.71:5000/v3/ \
  --bootstrap-internal-url http://192.168.71.71:5000/v3/ \
  --bootstrap-public-url http://192.168.71.71:5000/v3/ \
  --bootstrap-region-id RegionOne
```

Configure Apache and restart it:

```bash
echo 'ServerName os-ctrl' | sudo tee -a /etc/apache2/apache2.conf
sudo systemctl restart apache2
```

Write the environment file ~/admin-openrc:

```bash
export OS_PROJECT_DOMAIN_NAME=Default
export OS_USER_DOMAIN_NAME=Default
export OS_PROJECT_NAME=admin
export OS_USERNAME=admin
export OS_PASSWORD=openstack
export OS_AUTH_URL=http://192.168.71.71:5000/v3
export OS_IDENTITY_API_VERSION=3
export OS_IMAGE_API_VERSION=2
```

Verify:

```bash
source ~/admin-openrc
openstack token issue
```

If the token details are printed, Keystone is working.
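Under the hood, openstack token issue takes the OS_* variables and sends a password-authentication request to Keystone's POST /v3/auth/tokens. The issued token comes back in the X-Subject-Token response header. The Python sketch below only builds the JSON body of that request, with values taken from ~/admin-openrc, so it runs without a live Keystone.

```python
import json

AUTH_URL = "http://192.168.71.71:5000/v3"  # OS_AUTH_URL from ~/admin-openrc


def password_auth_body(user, password, project,
                       user_domain="Default", project_domain="Default"):
    """JSON body for POST {AUTH_URL}/auth/tokens: password method, project-scoped."""
    return {
        "auth": {
            "identity": {
                "methods": ["password"],
                "password": {
                    "user": {
                        "name": user,
                        "domain": {"name": user_domain},
                        "password": password,
                    }
                },
            },
            "scope": {
                "project": {"name": project, "domain": {"name": project_domain}}
            },
        }
    }


if __name__ == "__main__":
    body = password_auth_body("admin", "openstack", "admin")
    print(f"POST {AUTH_URL}/auth/tokens")
    print(json.dumps(body, indent=2))
```

To try it by hand, save the printed JSON as body.json and run curl -si -H 'Content-Type: application/json' -d @body.json http://192.168.71.71:5000/v3/auth/tokens. Keystone should answer 201 and include the token in the X-Subject-Token header.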

### Creating projects, users and service endpoints

These commands are tedious but mandatory, because every OpenStack service has to be registered in Keystone:

```bash
source ~/admin-openrc
openstack project create --domain default --description 'Service Project' service
openstack project create --domain default --description 'Demo Project' demo
openstack user create --domain default --password openstack demo
openstack role add --project demo --user demo member

for svc in glance placement nova neutron; do
  openstack user create --domain default --password openstack $svc
  openstack role add --project service --user $svc admin
done

openstack service create --name glance --description 'OpenStack Image' image
for iface in public internal admin; do
  openstack endpoint create --region RegionOne image $iface http://192.168.71.71:9292
done

openstack service create --name placement --description 'Placement API' placement
for iface in public internal admin; do
  openstack endpoint create --region RegionOne placement $iface http://192.168.71.71:8778
done

openstack service create --name nova --description 'OpenStack Compute' compute
for iface in public internal admin; do
  openstack endpoint create --region RegionOne compute $iface http://192.168.71.73:8774/v2.1
done

openstack service create --name neutron --description 'OpenStack Networking' network
for iface in public internal admin; do
  openstack endpoint create --region RegionOne network $iface http://192.168.71.72:9696
done
```
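Each registration has the same shape: one service create, then one endpoint create per interface (public, internal and admin). All three use the same URL because this lab has a single network. The Python sketch below generates those commands from one table, with hosts and ports copied from the commands above, so a URL is harder to mistype. It only prints the commands. Review the output, then pipe it to bash yourself.

```python
import shlex

# name -> (service type, description, endpoint URL), copied from the commands above
CATALOG = {
    "glance": ("image", "OpenStack Image", "http://192.168.71.71:9292"),
    "placement": ("placement", "Placement API", "http://192.168.71.71:8778"),
    "nova": ("compute", "OpenStack Compute", "http://192.168.71.73:8774/v2.1"),
    "neutron": ("network", "OpenStack Networking", "http://192.168.71.72:9696"),
}
INTERFACES = ("public", "internal", "admin")


def catalog_commands(catalog, region="RegionOne"):
    """Yield argv lists that register each service and its three endpoints."""
    for name, (svc_type, desc, url) in catalog.items():
        yield ["openstack", "service", "create", "--name", name,
               "--description", desc, svc_type]
        for iface in INTERFACES:
            yield ["openstack", "endpoint", "create", "--region", region,
                   svc_type, iface, url]


if __name__ == "__main__":
    for argv in catalog_commands(CATALOG):
        print(shlex.join(argv))  # quotes arguments such as 'OpenStack Image'
```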

## Step 5: Glance (image service)

On os-ctrl:

```bash
sudo apt-get install -y glance
```

Configure /etc/glance/glance-api.conf. The keystone_authtoken block is the same for every service, so later sections leave it out:

```bash
sudo crudini --set /etc/glance/glance-api.conf database connection \
  'mysql+pymysql://glance:openstack@192.168.71.65/glance'
sudo crudini --set /etc/glance/glance-api.conf keystone_authtoken www_authenticate_uri http://192.168.71.71:5000
sudo crudini --set /etc/glance/glance-api.conf keystone_authtoken auth_url http://192.168.71.71:5000
sudo crudini --set /etc/glance/glance-api.conf keystone_authtoken memcached_servers 192.168.71.65:11211
sudo crudini --set /etc/glance/glance-api.conf keystone_authtoken auth_type password
sudo crudini --set /etc/glance/glance-api.conf keystone_authtoken project_domain_name Default
sudo crudini --set /etc/glance/glance-api.conf keystone_authtoken user_domain_name Default
sudo crudini --set /etc/glance/glance-api.conf keystone_authtoken project_name service
sudo crudini --set /etc/glance/glance-api.conf keystone_authtoken username glance
sudo crudini --set /etc/glance/glance-api.conf keystone_authtoken password openstack
sudo crudini --set /etc/glance/glance-api.conf paste_deploy flavor keystone
sudo crudini --set /etc/glance/glance-api.conf DEFAULT enabled_backends 'fs:file'
sudo crudini --set /etc/glance/glance-api.conf glance_store default_backend fs
sudo crudini --set /etc/glance/glance-api.conf fs filesystem_store_datadir /var/lib/glance/images/
```

```bash
sudo su -s /bin/bash glance -c 'glance-manage db_sync'
sudo systemctl restart glance-api && sudo systemctl enable glance-api
```

Upload the cirros test image:

```bash
wget -q http://download.cirros-cloud.net/0.6.2/cirros-0.6.2-x86_64-disk.img -O /tmp/cirros.img
source ~/admin-openrc
openstack image create 'cirros-0.6.2' \
  --file /tmp/cirros.img --disk-format qcow2 --container-format bare --public
openstack image list
```

## Step 6: Placement

This also runs on os-ctrl. Placement is fairly simple:

```bash
sudo apt-get install -y placement-api
```

Configure /etc/placement/placement.conf. The keystone_authtoken section is left out again; it follows the same pattern as Glance:

```bash
sudo crudini --set /etc/placement/placement.conf placement_database connection \
  'mysql+pymysql://placement:openstack@192.168.71.65/placement'
sudo crudini --set /etc/placement/placement.conf api auth_strategy keystone
```

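```bash
sudo su -s /bin/bash placement -c 'placement-manage db sync'
sudo systemctl restart apache2
```

Verify:

```bash
openstack resource class list | head -5
```

If the client doesn't recognize the resource class commands, install the osc-placement plugin (package python3-osc-placement).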

## Step 7: Neutron (networking service)

This is the most complex part of the whole deployment, with 6 config files to edit. Work on os-net (.72):

```bash
sudo apt-get update
sudo apt-get install -y neutron-server neutron-plugin-ml2 \
  neutron-linuxbridge-agent neutron-l3-agent \
  neutron-dhcp-agent neutron-metadata-agent crudini
```

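### Detecting the NIC name

VMs created by vagrant-libvirt have two NICs, and the bridged one isn't necessarily called eth1. Check first:

```bash
# Print the interface that follows "dev" in the route to the gateway.
# A fixed field such as $5 would print the src IP here, because the gateway is on-link.
PHYS_IF=$(ip -o route get 192.168.71.1 | awk '{for (i = 1; i < NF; i++) if ($i == "dev") { print $(i + 1); exit }}')
echo "Bridged NIC: $PHYS_IF"
```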
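In ip route get output, the interface name always follows the dev keyword, but its position shifts. An on-link destination prints `<dst> dev <if> src <ip> ...`. A destination behind a gateway prints `<dst> via <gw> dev <if> ...`. The Python sketch below looks for the keyword instead of relying on a fixed column. It shells out to ip, so it assumes iproute2 is installed, as it is on Ubuntu.

```python
import subprocess


def route_dev(route_output):
    """Return the token after 'dev' in `ip -o route get` output, or None if absent."""
    tokens = route_output.split()
    for i, tok in enumerate(tokens[:-1]):
        if tok == "dev":
            return tokens[i + 1]
    return None


if __name__ == "__main__":
    out = subprocess.run(
        ["ip", "-o", "route", "get", "192.168.71.1"],
        capture_output=True, text=True, check=True,
    ).stdout
    print("bridged NIC:", route_dev(out))
```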

### neutron.conf

```bash
sudo crudini --set /etc/neutron/neutron.conf database connection \
  'mysql+pymysql://neutron:openstack@192.168.71.65/neutron'
sudo crudini --set /etc/neutron/neutron.conf DEFAULT core_plugin ml2
sudo crudini --set /etc/neutron/neutron.conf DEFAULT service_plugins router
sudo crudini --set /etc/neutron/neutron.conf DEFAULT transport_url \
  'rabbit://openstack:openstack@192.168.71.65'
sudo crudini --set /etc/neutron/neutron.conf DEFAULT auth_strategy keystone
sudo crudini --set /etc/neutron/neutron.conf DEFAULT notify_nova_on_port_status_changes true
sudo crudini --set /etc/neutron/neutron.conf DEFAULT notify_nova_on_port_data_changes true
```

### ml2_conf.ini

```bash
sudo crudini --set /etc/neutron/plugins/ml2/ml2_conf.ini ml2 type_drivers 'flat,vxlan'
sudo crudini --set /etc/neutron/plugins/ml2/ml2_conf.ini ml2 tenant_network_types vxlan
sudo crudini --set /etc/neutron/plugins/ml2/ml2_conf.ini ml2 mechanism_drivers 'linuxbridge,l2population'
sudo crudini --set /etc/neutron/plugins/ml2/ml2_conf.ini ml2 extension_drivers port_security
sudo crudini --set /etc/neutron/plugins/ml2/ml2_conf.ini ml2_type_flat flat_networks provider
sudo crudini --set /etc/neutron/plugins/ml2/ml2_conf.ini ml2_type_vxlan vni_ranges 1:1000
```
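vni_ranges = 1:1000 is the pool of VXLAN network IDs that Neutron hands out to self-service networks, one VNI per network. This lab can therefore create up to 1000 of them. The Python sketch below shows that arithmetic. It accepts the option's comma-separated `<min>:<max>` format, but it is an illustration, not Neutron's own parser. The bounds assume the VXLAN VNI is a 24-bit field starting at 1.

```python
VNI_MIN, VNI_MAX = 1, 2**24 - 1  # VXLAN Network Identifiers are 24-bit values


def parse_vni_ranges(value):
    """Parse a vni_ranges string like '1:1000' or '1:1000,5000:5999' into (low, high) tuples."""
    ranges = []
    for part in value.split(","):
        low, high = (int(x) for x in part.strip().split(":"))
        if not VNI_MIN <= low <= high <= VNI_MAX:
            raise ValueError(f"invalid VNI range: {part.strip()!r}")
        ranges.append((low, high))
    return ranges


def vni_capacity(value):
    """How many VXLAN tenant networks the configured ranges can hold."""
    return sum(high - low + 1 for low, high in parse_vni_ranges(value))


if __name__ == "__main__":
    print(vni_capacity("1:1000"))  # 1000
```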

### linuxbridge_agent.ini

```bash
sudo crudini --set /etc/neutron/plugins/ml2/linuxbridge_agent.ini \
  linux_bridge physical_interface_mappings "provider:$PHYS_IF"
sudo crudini --set /etc/neutron/plugins/ml2/linuxbridge_agent.ini vxlan enable_vxlan true
sudo crudini --set /etc/neutron/plugins/ml2/linuxbridge_agent.ini vxlan local_ip 192.168.71.72
sudo crudini --set /etc/neutron/plugins/ml2/linuxbridge_agent.ini vxlan l2_population true
sudo crudini --set /etc/neutron/plugins/ml2/linuxbridge_agent.ini securitygroup enable_security_group true
sudo crudini --set /etc/neutron/plugins/ml2/linuxbridge_agent.ini securitygroup firewall_driver \
  neutron.agent.linux.iptables_firewall.IptablesFirewallDriver
```

```bash
# l3_agent.ini
sudo crudini --set /etc/neutron/l3_agent.ini DEFAULT interface_driver linuxbridge
# dhcp_agent.ini
sudo crudini --set /etc/neutron/dhcp_agent.ini DEFAULT interface_driver linuxbridge
sudo crudini --set /etc/neutron/dhcp_agent.ini DEFAULT dhcp_driver neutron.agent.linux.dhcp.Dnsmasq
sudo crudini --set /etc/neutron/dhcp_agent.ini DEFAULT enable_isolated_metadata true
# metadata_agent.ini: points at the Nova metadata API on os-nova; the secret must
# match metadata_proxy_shared_secret in the [neutron] section of nova.conf
sudo crudini --set /etc/neutron/metadata_agent.ini DEFAULT nova_metadata_host 192.168.71.73
sudo crudini --set /etc/neutron/metadata_agent.ini DEFAULT metadata_proxy_shared_secret openstack
```

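### Kernel parameters

```bash
sudo modprobe br_netfilter
echo 'br_netfilter' | sudo tee /etc/modules-load.d/br_netfilter.conf
sudo sysctl -w net.bridge.bridge-nf-call-iptables=1
sudo sysctl -w net.bridge.bridge-nf-call-ip6tables=1
# sysctl -w does not survive a reboot, so persist the settings as well
printf 'net.bridge.bridge-nf-call-iptables = 1\nnet.bridge.bridge-nf-call-ip6tables = 1\n' | sudo tee /etc/sysctl.d/99-bridge-nf.conf
```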

### Syncing the database and starting the services

```bash
sudo su -s /bin/bash neutron -c \
  'neutron-db-manage --config-file /etc/neutron/neutron.conf \
   --config-file /etc/neutron/plugins/ml2/ml2_conf.ini upgrade head'

sudo systemctl restart neutron-server neutron-linuxbridge-agent \
  neutron-dhcp-agent neutron-metadata-agent neutron-l3-agent
sudo systemctl enable neutron-server neutron-linuxbridge-agent \
  neutron-dhcp-agent neutron-metadata-agent neutron-l3-agent
```

Verify:

```bash
openstack network agent list
```

All 4 agents (Linux bridge, DHCP, L3 and metadata) should show up as alive on os-net.

## Step 8: Horizon (dashboard)

Install Horizon on os-ctrl. This is the easiest step:

```bash
sudo apt-get install -y openstack-dashboard
```

Edit /etc/openstack-dashboard/local_settings.py:

```bash
sudo sed -i "s/^OPENSTACK_HOST = .*/OPENSTACK_HOST = '192.168.71.71'/" /etc/openstack-dashboard/local_settings.py
sudo sed -i "s/^ALLOWED_HOSTS = .*/ALLOWED_HOSTS = ['*']/" /etc/openstack-dashboard/local_settings.py
sudo sed -i "s|^OPENSTACK_KEYSTONE_URL = .*|OPENSTACK_KEYSTONE_URL = 'http://192.168.71.71:5000/v3'|" /etc/openstack-dashboard/local_settings.py
```

In the same file, point the cache, and with it the session backend, at Memcached on the host:

```python
CACHES = {
    'default': {
        'BACKEND': 'django.core.cache.backends.memcached.PyMemcacheCache',
        'LOCATION': '192.168.71.65:11211',
    }
}
SESSION_ENGINE = 'django.contrib.sessions.backends.cache'
```

```bash
sudo systemctl reload apache2
```

Open http://192.168.71.71/horizon and log in as admin / openstack.

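## Step 9: Nova control plane

On os-nova (.73):

```bash
sudo apt-get update
sudo apt-get install -y nova-api nova-conductor nova-scheduler nova-novncproxy crudini
```

Nova has the most settings of any service, and /etc/nova/nova.conf needs quite a few sections. The keystone_authtoken block is again left out: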

```bash
sudo crudini --set /etc/nova/nova.conf DEFAULT my_ip 192.168.71.73
sudo crudini --set /etc/nova/nova.conf DEFAULT transport_url 'rabbit://openstack:openstack@192.168.71.65'
sudo crudini --set /etc/nova/nova.conf api_database connection 'mysql+pymysql://nova:openstack@192.168.71.65/nova_api'
sudo crudini --set /etc/nova/nova.conf database connection 'mysql+pymysql://nova:openstack@192.168.71.65/nova'
sudo crudini --set /etc/nova/nova.conf service_user send_service_user_token true
sudo crudini --set /etc/nova/nova.conf vnc enabled true
sudo crudini --set /etc/nova/nova.conf vnc server_listen 0.0.0.0
sudo crudini --set /etc/nova/nova.conf vnc server_proxyclient_address 192.168.71.73
sudo crudini --set /etc/nova/nova.conf vnc novncproxy_base_url 'http://192.168.71.73:6080/vnc_auto.html'
sudo crudini --set /etc/nova/nova.conf glance api_servers http://192.168.71.71:9292
sudo crudini --set /etc/nova/nova.conf scheduler discover_hosts_in_cells_interval 300
```
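Every service needs the same two kinds of URL: a SQLAlchemy database URL and a RabbitMQ transport_url. Both have the form scheme://user:password@host. The Python sketch below builds them in one place. It percent-encodes the user and password, which matters once you replace the lab password openstack with one containing @, : or /. When the port is omitted, the defaults (3306 for MySQL, 5672 for RabbitMQ) apply.

```python
from urllib.parse import quote

INFRA_HOST = "192.168.71.65"  # MariaDB and RabbitMQ run in Docker on the x99 host


def db_url(user, password, database, host=INFRA_HOST):
    """SQLAlchemy URL for a [database] / [api_database] connection via PyMySQL."""
    return f"mysql+pymysql://{quote(user, safe='')}:{quote(password, safe='')}@{host}/{database}"


def transport_url(user, password, host=INFRA_HOST, port=None):
    """oslo.messaging transport_url pointing at RabbitMQ."""
    netloc = f"{host}:{port}" if port else host
    return f"rabbit://{quote(user, safe='')}:{quote(password, safe='')}@{netloc}"


if __name__ == "__main__":
    print(transport_url("openstack", "openstack"))
    print(db_url("nova", "openstack", "nova_api"))
    print(db_url("nova", "openstack", "nova"))
```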

Initialize the databases and cell mappings:

```bash
sudo su -s /bin/bash nova -c 'nova-manage api_db sync'
sudo su -s /bin/bash nova -c 'nova-manage cell_v2 map_cell0'
sudo su -s /bin/bash nova -c 'nova-manage cell_v2 create_cell --name=cell1 --verbose'
sudo su -s /bin/bash nova -c 'nova-manage db sync'
```

Start the services:

```bash
sudo systemctl restart nova-api nova-scheduler nova-conductor nova-novncproxy
sudo systemctl enable nova-api nova-scheduler nova-conductor nova-novncproxy
```

## Step 10: Nova compute nodes

Run the following on both os-comp1 (.74) and os-comp2 (.75):

```bash
sudo apt-get update
sudo apt-get install -y nova-compute neutron-linuxbridge-agent crudini
```

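/etc/nova/nova.conf is almost the same as on the control plane, with two differences. my_ip and vnc server_proxyclient_address must be set to the node's own IP. novncproxy_base_url keeps pointing at the noVNC proxy on os-nova (192.168.71.73:6080), since that's the URL the browser opens.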

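Now the important part: the virt_type setting.

The nova-compute package on Ubuntu 24.04 ships its own /etc/nova/nova-compute.conf, which sets virt_type=kvm by default. Even if you set virt_type=qemu in nova.conf, this file overrides it. nova-compute loads both files at startup, and the one loaded later wins.

So **both files** need to be changed: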

```bash
sudo crudini --set /etc/nova/nova.conf libvirt virt_type qemu
sudo crudini --set /etc/nova/nova.conf libvirt cpu_mode none
sudo crudini --set /etc/nova/nova-compute.conf libvirt virt_type qemu
```
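The override is ordinary "last file wins" behavior. Both files define the same key under [libvirt], and whichever file is parsed later supplies the value. Python's configparser is not oslo.config, which is what nova actually uses, but it resolves this simple case the same way. The Python sketch below uses it to show why editing only nova.conf has no effect. It assumes, as described above, that the service loads nova-compute.conf after nova.conf. ps -ef | grep nova-compute shows the actual --config-file order.

```python
import configparser
import os
import tempfile


def effective_value(paths, section, key):
    """Parse config files in order and return the value that ends up winning."""
    cfg = configparser.ConfigParser(interpolation=None)
    cfg.read(paths)  # a key set again in a later file replaces the earlier value
    return cfg.get(section, key, fallback=None)


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as d:
        nova = os.path.join(d, "nova.conf")
        compute = os.path.join(d, "nova-compute.conf")
        with open(nova, "w") as fh:
            fh.write("[libvirt]\nvirt_type = qemu\ncpu_mode = none\n")
        with open(compute, "w") as fh:
            fh.write("[libvirt]\nvirt_type = kvm\n")
        # Same order as the service: nova.conf first, nova-compute.conf second
        print(effective_value([nova, compute], "libvirt", "virt_type"))  # kvm
```

Keys set only in the earlier file, such as cpu_mode here, still survive. Only keys repeated in the later file are overridden.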

The Neutron linuxbridge agent needs configuring too. It's the same as on os-net, but local_ip is set to the node's own IP.

Start the services:

```bash
sudo systemctl restart nova-compute neutron-linuxbridge-agent
sudo systemctl enable nova-compute neutron-linuxbridge-agent
```

Once both nodes are set up, discover the new hosts from os-nova:

```bash
sudo su -s /bin/bash nova -c 'nova-manage cell_v2 discover_hosts --verbose'
```

Verify:

```bash
openstack compute service list
openstack network agent list
```

Both os-comp1 and os-comp2 should now be online.
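Checking the tables by eye works fine for two nodes. For a scriptable check, the CLI can emit JSON with -f json. The Python sketch below flags expected compute hosts that are missing, or whose nova-compute service is not enabled and up. One assumption: the JSON objects use the same column names as the table output (Binary, Host, Status, State). Check your client version's output if the keys differ. Run it after source ~/admin-openrc.

```python
import json
import subprocess


def check_compute(services_json, expected_hosts):
    """Return (missing hosts, hosts whose nova-compute is not enabled/up)."""
    services = [s for s in json.loads(services_json) if s["Binary"] == "nova-compute"]
    seen = {s["Host"] for s in services}
    missing = sorted(set(expected_hosts) - seen)
    unhealthy = sorted(
        s["Host"] for s in services
        if s["Status"] != "enabled" or s["State"] != "up"
    )
    return missing, unhealthy


if __name__ == "__main__":
    out = subprocess.run(
        ["openstack", "compute", "service", "list", "-f", "json"],
        capture_output=True, text=True, check=True,
    ).stdout
    missing, unhealthy = check_compute(out, ["os-comp1", "os-comp2"])
    print("missing:", missing or "none")
    print("not up:", unhealthy or "none")
```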

## Step 11: Creating networks and launching an instance

Finally, the exciting part.

### Creating the provider network (attached directly to the physical network)

```bash
openstack network create --share --external \
  --provider-physical-network provider \
  --provider-network-type flat provider

openstack subnet create --network provider \
  --allocation-pool start=192.168.71.200,end=192.168.71.250 \
  --dns-nameserver 8.8.8.8 \
  --gateway 192.168.71.1 \
  --subnet-range 192.168.71.0/24 provider-subnet
```
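The provider subnet is the real LAN, 192.168.71.0/24, so the allocation pool must not overlap the gateway or any statically assigned address. It should also sit outside the LAN router's own DHCP range, which can't be checked from here. The Python sketch below runs the check with the standard ipaddress module, using the addresses from this post.

```python
import ipaddress

SUBNET = ipaddress.ip_network("192.168.71.0/24")
GATEWAY = ipaddress.ip_address("192.168.71.1")
POOL = (ipaddress.ip_address("192.168.71.200"), ipaddress.ip_address("192.168.71.250"))
# Static addresses used in this lab: the x99 host and the five VMs
STATIC = [ipaddress.ip_address(f"192.168.71.{n}") for n in (65, 71, 72, 73, 74, 75)]


def pool_problems(subnet, gateway, pool, static):
    """Return a list of human-readable problems with an allocation pool."""
    start, end = pool
    problems = []
    if start not in subnet or end not in subnet:
        problems.append("pool is not inside the subnet")
    if start > end:
        problems.append("pool start is after pool end")
    for addr in [gateway, *static]:
        if start <= addr <= end:
            problems.append(f"{addr} is inside the pool")
    return problems


def pool_size(pool):
    """Number of addresses in an inclusive start..end range."""
    start, end = pool
    return int(end) - int(start) + 1


if __name__ == "__main__":
    print(pool_problems(SUBNET, GATEWAY, POOL, STATIC) or "pool looks fine")
    print(pool_size(POOL), "addresses in the pool")
```

The pool holds 51 addresses. Not all of them end up as floating IPs, because ports such as the router's external gateway also take addresses from it.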

### Creating the self-service network (VXLAN tenant network)

```bash
openstack network create selfservice

openstack subnet create --network selfservice \
  --dns-nameserver 8.8.8.8 \
  --gateway 10.0.0.1 \
  --subnet-range 10.0.0.0/24 selfservice-subnet
```

### Creating the router

```bash
openstack router create router
openstack router set router --external-gateway provider
openstack router add subnet router selfservice-subnet
```

### Allowing ICMP and SSH in the security group

```bash
openstack security group rule create --proto icmp default
openstack security group rule create --proto tcp --dst-port 22 default
```

### Creating flavors

```bash
openstack flavor create --id 0 --vcpus 1 --ram 64 --disk 1 m1.nano
openstack flavor create --id 1 --vcpus 1 --ram 512 --disk 1 m1.tiny
openstack flavor create --id 2 --vcpus 1 --ram 2048 --disk 20 m1.small
openstack flavor create --id 3 --vcpus 2 --ram 4096 --disk 40 m1.medium
openstack flavor create --id 4 --vcpus 4 --ram 8192 --disk 80 m1.large
```

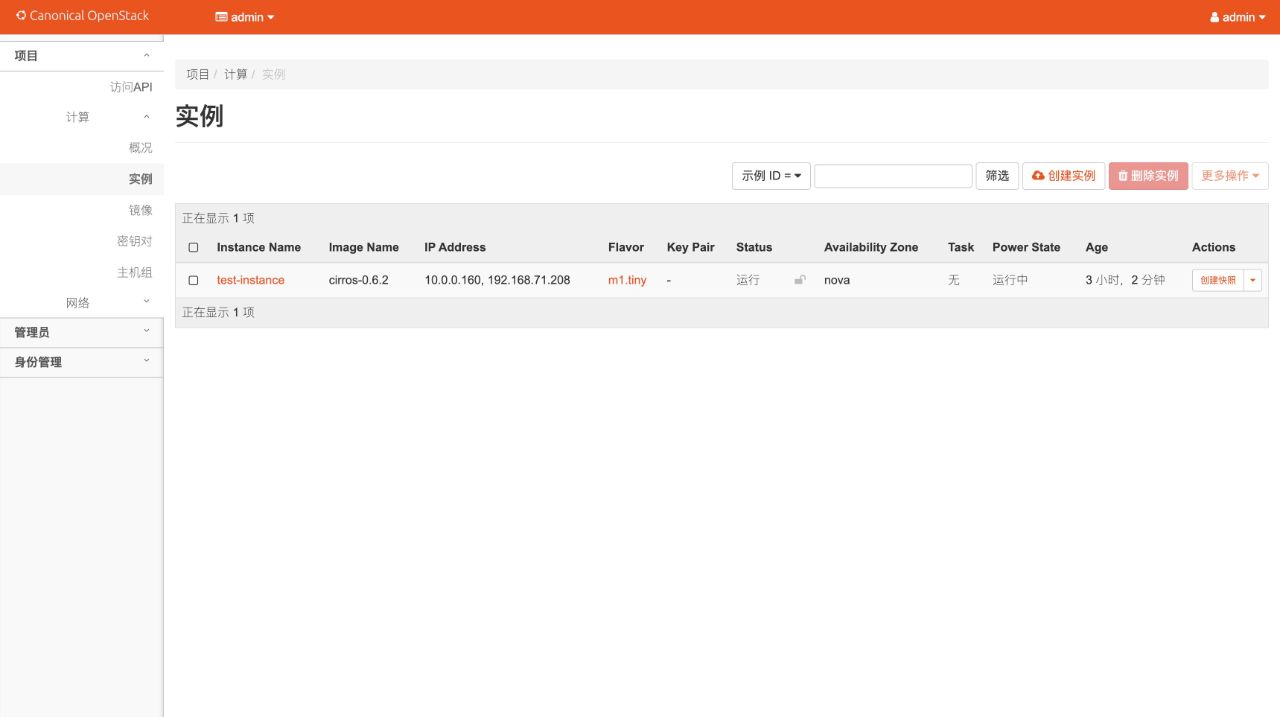

### Launching a test instance!

```bash
openstack server create --flavor m1.tiny --image cirros-0.6.2 \
  --network selfservice --security-group default test-instance
```

Once it turns ACTIVE, assign a floating IP:

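```bash
# Allocate a floating IP from the provider pool and capture its address
FIP=$(openstack floating ip create provider -f value -c floating_ip_address)
openstack server add floating ip test-instance "$FIP"
echo "$FIP"   # 192.168.71.208 in my case
```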

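Ping it from the host:

SSH in and take a look:

If you can get in, the whole chain works end to end: Keystone authentication → Glance serves the image → Nova schedules onto a compute node → Neutron allocates the network → DHCP hands out an IP → VXLAN tunnel → L3 routing → floating IP NAT → reachable from the host.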

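## Pitfalls

### Pit 1: Nested KVM falls over

I assumed that with nested virtualization enabled on the compute nodes I could use virt_type=kvm. Instead, QEMU 8.2.2 crashed outright in the nested environment:

```text
KVM internal error. Suberror: 3
```

Or, even more absurdly:

```text
ERROR:system/cpus.c:504:qemu_mutex_lock_iothread_impl: assertion failed
```

This is a known QEMU 8.2 issue with no fix available for now. The workaround: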

```ini
[libvirt]
virt_type = qemu
cpu_mode = none
```

This uses pure software emulation instead of hardware acceleration. It's slower, but at least it runs.

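### Pit 2: nova-compute.conf overrides nova.conf

This one took ages to track down. I had clearly set virt_type=qemu in nova.conf, yet the instance XML still said type='kvm'.

The cause: Ubuntu's nova-compute package ships /etc/nova/nova-compute.conf with virt_type=kvm hardcoded, and the config file loaded later at startup overrides the earlier one. Both files have to be changed.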

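### Pit 3: L3 agent iptables permission error

After I created the router, the L3 agent kept logging this error:

```text
PermissionError: [Errno 13] Permission denied: '/var/lib/neutron/tmp/neutron-iptables-qrouter-...'
```

The cause: the lock files had been created by root, but the neutron process runs as the neutron user. The fix: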

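```bash
# chown the directory recursively rather than relying on a glob that the
# unprivileged shell expands before sudo runs
sudo chown -R neutron:neutron /var/lib/neutron/tmp
sudo systemctl restart neutron-l3-agent
```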
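To catch this earlier, look for anything under /var/lib/neutron that isn't owned by the neutron user. Such files are typically left behind when a neutron command was run as root. Run the Python sketch below as root so it can read everything. It assumes the service account is named neutron, as in the Ubuntu packages.

```python
import os
import pwd


def wrong_owner(directory, uid):
    """List paths under `directory` whose owner uid differs from `uid`."""
    bad = []
    for root, dirs, files in os.walk(directory):
        for name in dirs + files:
            path = os.path.join(root, name)
            if os.lstat(path).st_uid != uid:  # lstat: don't follow symlinks
                bad.append(path)
    return sorted(bad)


if __name__ == "__main__":
    neutron_uid = pwd.getpwnam("neutron").pw_uid
    for path in wrong_owner("/var/lib/neutron", neutron_uid):
        print(path)
```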

## Final verification

Here's the state once everything is done:

```text
$ openstack compute service list
+----------------+----------+----------+---------+-------+
| Binary         | Host     | Zone     | Status  | State |
+----------------+----------+----------+---------+-------+
| nova-scheduler | os-nova  | internal | enabled | up    |
| nova-conductor | os-nova  | internal | enabled | up    |
| nova-compute   | os-comp1 | nova     | enabled | up    |
| nova-compute   | os-comp2 | nova     | enabled | up    |
+----------------+----------+----------+---------+-------+

$ openstack network agent list
+--------------------+----------+-------+-------+
| Agent Type         | Host     | Alive | State |
+--------------------+----------+-------+-------+
| Linux bridge agent | os-net   | :-)   | UP    |
| Linux bridge agent | os-comp1 | :-)   | UP    |
| Linux bridge agent | os-comp2 | :-)   | UP    |
| DHCP agent         | os-net   | :-)   | UP    |
| L3 agent           | os-net   | :-)   | UP    |
| Metadata agent     | os-net   | :-)   | UP    |
+--------------------+----------+-------+-------+

$ openstack server list
+---------------+--------+----------------------------------------+--------------+---------+
| Name          | Status | Networks                               | Image        | Flavor  |
+---------------+--------+----------------------------------------+--------------+---------+
| test-instance | ACTIVE | selfservice=10.0.0.160, 192.168.71.208 | cirros-0.6.2 | m1.tiny |
+---------------+--------+----------------------------------------+--------------+---------+
```

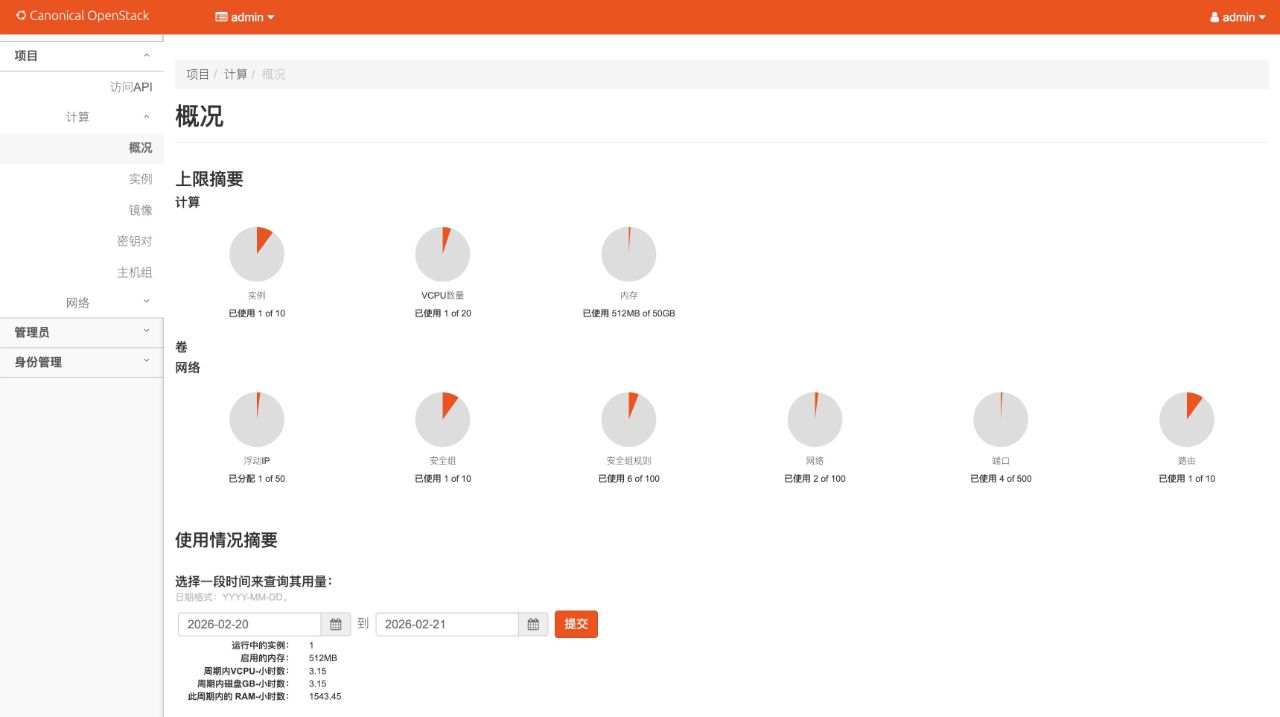

Horizon dashboard screenshot, with everything up and running:

### Access URLs

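## Summary

Deploying multi-node OpenStack by hand is a hassle. Even so, it teaches you far more than DevStack about how the components relate to each other. You see exactly how each service authenticates, how messages flow between services and how network packets travel.

A few tips for anyone who wants to try this:

- **crudini is a lifesaver.** It's 100x more reliable than sed for editing INI files.
- **Vagrant + libvirt saves a huge amount of effort** compared with building VMs by hand. One file manages every VM.
- **Run the infrastructure in Docker.** You don't have to install a pile of databases inside the VMs, and resetting is easy.
- **Nested virtualization isn't usable yet**, at least not with the QEMU 8.2 + Ubuntu 24.04 combination. Just stick with qemu emulation.
- **netplan try saves lives.**

Next time I might try adding Cinder (block storage) and Swift (object storage). Another option is upgrading QEMU to 9.x to see whether that fixes the nested virtualization problem.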